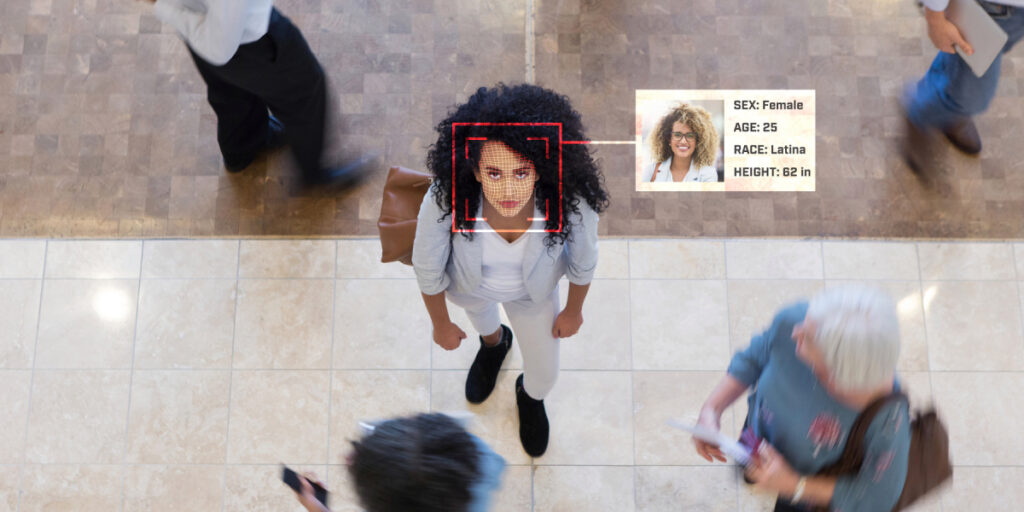

An Association for Computing Machinery (ACM) tech policy group today urged lawmakers to immediately suspend use of facial recognition by businesses and governments, citing documented ethnic, racial, and gender bias. In a letter released today by the U.S. Technology Policy Committee (USTPC), the group acknowledges that the tech is expected to improve in the future but is not yet “sufficiently mature” and is therefore a threat to people’s human and legal rights.

“The consequences of such bias, USTPC notes, frequently can and do extend well beyond inconvenience to profound injury, particularly to the lives, livelihoods, and fundamental rights of individuals in specific demographic groups, including some of the most vulnerable populations in our society,” the letter reads.

Organizations studying use of the technology, like the Perpetual Lineup Project from Georgetown University, conclude that broad deployment of the tech will negatively impact the lives of Black people in the United States. Privacy and racial justice advocacy groups like the ACLU and the Algorithmic Justice League have supported halts to the use of facial recognition in the past, but with nearly 100,000 members around the world, ACM is one of the biggest computer science organizations in the world. ACM also hosts large annual conferences like SIGGRAPH and the International Conference on Supercomputing (ICS).

The letter also prescribes principles for facial recognition regulation covering issues like accuracy, transparency, risk management, and accountability. Recommended principles include:


- Disaggregate system error rates based on race, gender, sex, and other appropriate demographics

- Facial recognition systems must undergo third-party audits and “robust government oversight”

- People must be notified when facial recognition is in use, and appropriate use cases must be defined before deployment

- Organizations using facial recognition should be held accountable if or when a facial recognition system causes a person harm
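To make the first recommendation concrete: disaggregating error rates means reporting a system's mistakes separately for each demographic group rather than as a single overall figure. The short Python sketch below is illustrative only and is not drawn from the letter. The `Trial` record, its field names, the group labels "A" and "B", and the data are all hypothetical. It counts false matches (two different people wrongly declared a match) and false non-matches (the same person wrongly rejected) per group.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Trial:
    """One hypothetical 1:1 comparison (field names are illustrative only)."""
    group: str          # demographic label assigned to the subject
    same_person: bool   # ground truth: do both images show the same person?
    matched: bool       # system output: did it declare a match?


def disaggregated_error_rates(trials):
    """Return {group: (false_match_rate, false_non_match_rate)} for each group."""
    counts = defaultdict(lambda: {"fm": 0, "impostor": 0, "fnm": 0, "genuine": 0})
    for t in trials:
        c = counts[t.group]
        if t.same_person:
            c["genuine"] += 1
            if not t.matched:
                c["fnm"] += 1  # same person wrongly rejected
        else:
            c["impostor"] += 1
            if t.matched:
                c["fm"] += 1  # different people wrongly matched
    rates = {}
    for group, c in counts.items():
        # NaN signals that a group had no comparisons of that kind.
        fmr = c["fm"] / c["impostor"] if c["impostor"] else float("nan")
        fnmr = c["fnm"] / c["genuine"] if c["genuine"] else float("nan")
        rates[group] = (fmr, fnmr)
    return rates


# Invented toy data: four comparisons per hypothetical group.
trials = [
    Trial("A", False, False), Trial("A", False, False),
    Trial("A", True, True), Trial("A", True, True),
    Trial("B", False, True), Trial("B", False, False),
    Trial("B", True, False), Trial("B", True, True),
]
for group, (fmr, fnmr) in sorted(disaggregated_error_rates(trials).items()):
    print(f"group {group}: FMR={fmr:.2f}, FNMR={fnmr:.2f}")
```

On this invented data, pooling all eight comparisons would report a 25% rate for each error type. That figure hides the fact that every error falls on group B, and per-group reporting is what makes the gap visible.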

The letter does not call for a permanent ban on facial recognition. Instead, it calls for a temporary moratorium until accuracy standards for race and gender performance, as well as laws and regulations, can be put in place. Tests of major facial recognition systems in 2018 and 2019 by the Gender Shades project and then the Department of Commerce’s NIST found that facial recognition systems exhibited race and gender bias, as well as poor performance on people who do not conform to a single gender identity.

The committee’s statement comes at the end of what has been a historic month for facial recognition software. Last week, members of Congress from the Senate and House of Representatives introduced legislation that would prohibit federal employees from using facial recognition and cut funding for state and local governments that continue using the technology. Lawmakers at the city, state, and national level who are considering regulation of facial recognition frequently cite bias as a major motivation for passing legislation against its use. And Amazon, IBM, and Microsoft halted or ended sales of facial recognition to police shortly after the height of Black Lives Matter protests that spread to more than 2,000 cities across the U.S.

Citing race and gender bias and misidentification, Boston became one of the biggest cities in the U.S. to impose a facial recognition ban. That same day, people learned the story of Detroit resident Robert Williams, who is believed to be the first person falsely arrested and charged with a crime because of faulty facial recognition. Detroit police chief James Craig said Monday that the facial recognition software Detroit uses is inaccurate 96% of the time.