Apple sued over 2022 dropping of CSAM detection features

A victim of childhood sexual abuse is suing Apple over its 2022 decision to drop a previously announced plan to scan images stored in iCloud for child sexual abuse material.

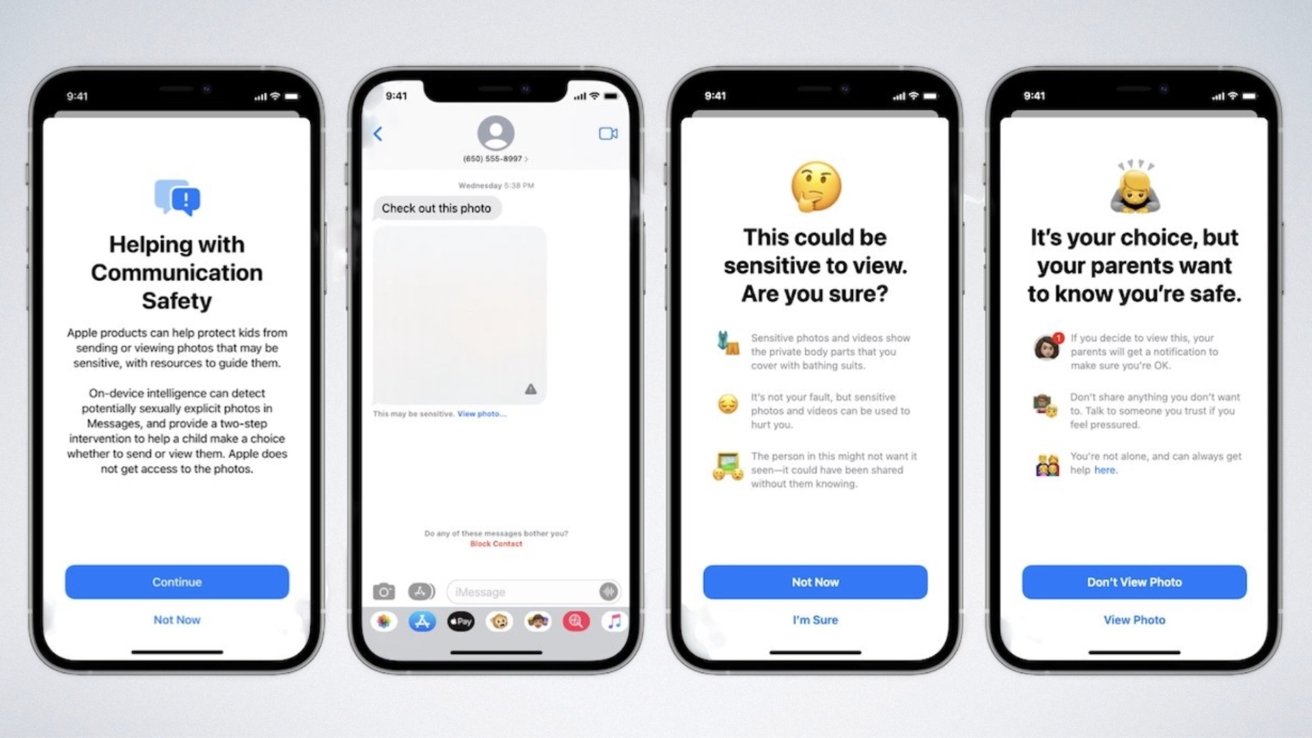

Apple originally announced a plan in late 2021 to detect child sexual abuse material (CSAM) by scanning images on-device as they were uploaded to iCloud, using a hash-matching system. It would also warn users before they sent or received photos containing algorithmically detected nudity.

The nudity-detection feature, called Communication Safety, is still in place today. However, Apple dropped its plan for CSAM detection after backlash from privacy experts, child safety groups, and governments.


Source: AppleInsider News
